Lab 04 - Camera: Cones detection

Robotics II

Poznan University of Technology, Institute of Robotics and Machine Intelligence

Laboratory 4: Cones detection using Camera sensor


In this lab, you will train an object detection model. The object to detect is a traffic cone.

Part I - train the cone detector

First, follow the interactive tutorial. It uses the YOLOv5 model for object detection and the MIT Driverless FS Team cones dataset. Remember to save the exported ONNX model from the final step.

Part II - build the inference pipeline

Now, your goal is to build an object detection pipeline that estimates the positions of the cones. Note that a camera image alone does not give us the true depth (distance) to an object. For this reason, in this course we only estimate a fake distance based on mathematical calculations.
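The lab text does not state which formula is used for the fake distance. As an illustration only, one common approximation is the pinhole camera model, where the distance is proportional to the real object height divided by its height in pixels. The function name, the focal length, and the cone height below are assumptions made for this sketch, not values taken from the lab code or config.

```python
def fake_distance(box_height_px: float,
                  focal_length_px: float = 400.0,  # assumed focal length in pixels
                  cone_height_m: float = 0.3) -> float:  # assumed real cone height
    """Approximate the distance (in metres) to a cone from its bounding-box height.

    Pinhole model: distance = focal_length * real_height / height_in_pixels.
    """
    if box_height_px <= 0:
        raise ValueError("box_height_px must be positive")
    return focal_length_px * cone_height_m / box_height_px


# A taller box in the image means a closer cone.
near = fake_distance(120.0)
far = fake_distance(30.0)
```

Such an estimate is only as good as the assumed cone height and the tightness of the predicted box, which is why the result is called a fake distance rather than a measurement.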

Tasks

Move the ONNX model to the Docker container. Set the absolute path to the model in `RoboticsII-FSDS/config/config.yaml`.

Your workspace file is `scripts/vision_detector.py`. Review its contents. You can execute it using the command `roslaunch fsds_roboticsII vision_detector.launch`.

Our model requires the input image in a special format. Fill in the `preprocess` function inside the `YOLOOnnxDetector` class. Steps:

- transpose the image from channel-last to channel-first representation (HWC -> CHW), e.g. (608,608,3) -> (3,608,608),
- swap the color channels from BGR to RGB,
- convert the array to `np.float32` and rescale the image values to the range [0, 1],
- add an additional axis 0 (convert the image to a batch), e.g. (3,608,608) -> (1,3,608,608).
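The preprocessing steps for `preprocess` can be sketched with plain NumPy as follows. This is a minimal standalone function, not the actual method of `YOLOOnnxDetector`. It assumes the input is a `uint8` BGR image that has already been resized to the model input size, and the parameter name `image_bgr` is made up for the example.

```python
import numpy as np


def preprocess(image_bgr: np.ndarray) -> np.ndarray:
    """Convert an HWC, BGR, uint8 image into a 1xCxHxW, RGB, float32 batch."""
    chw = image_bgr.transpose(2, 0, 1)        # HWC -> CHW
    rgb = chw[::-1]                           # BGR -> RGB: reverse the channel axis
    scaled = rgb.astype(np.float32) / 255.0   # uint8 [0, 255] -> float32 [0, 1]
    batch = scaled[np.newaxis, ...]           # CHW -> 1xCxHxW
    # The reversed view has negative strides, so make the memory contiguous.
    return np.ascontiguousarray(batch)


# Example: a pure-blue BGR image ends up with its blue values in RGB channel 2.
example = np.zeros((608, 608, 3), dtype=np.uint8)
example[..., 0] = 255
batch = preprocess(example)
```

The order of the transpose and the channel swap does not matter as long as the swap is applied to the channel axis, which is axis 0 after the transpose.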

At the end of the `camera_detection_callback` function, implement a loop over the `boxes` that draws each box on the `im` array.

Uncomment the steering node inside `vision_detector.launch`. Run the script again. Observe the behaviour of the racecar and the object detection. Try to improve the steering performance:

- you can modify the `vehicle` parameters in the config,
- you can modify the dummy-steering cut-off parameter `cone_too_small_thresh`,
- you can modify the model postprocessing parameters `conf_thres` or `iou_thres`.
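To see what `conf_thres` and `iou_thres` control, here is a simplified sketch of confidence filtering followed by non-maximum suppression. It is not the postprocessing code from the lab repository. The function names and the `[x1, y1, x2, y2]` box layout are assumptions, and the default values are only illustrative.

```python
import numpy as np


def iou_one_to_many(box, boxes):
    """IoU between one box and an array of boxes, all given as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-9)


def filter_detections(boxes, scores, conf_thres=0.25, iou_thres=0.45):
    """Drop low-confidence boxes, then suppress boxes overlapping a better one."""
    boxes = np.asarray(boxes, dtype=np.float32).reshape(-1, 4)
    scores = np.asarray(scores, dtype=np.float32)
    confident = scores >= conf_thres          # conf_thres: minimum score to keep
    boxes, scores = boxes[confident], scores[confident]
    order = np.argsort(-scores)               # best score first
    kept = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        kept.append(best)
        if rest.size == 0:
            break
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_thres]   # iou_thres: maximum allowed overlap
    kept = np.array(kept, dtype=int)
    return boxes[kept], scores[kept]


boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60], [0, 0, 10, 10]]
scores = [0.9, 0.8, 0.7, 0.1]
kept_boxes, kept_scores = filter_detections(boxes, scores)
```

Raising `conf_thres` removes weak detections at the risk of missing distant cones, while raising `iou_thres` lets more overlapping boxes survive.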

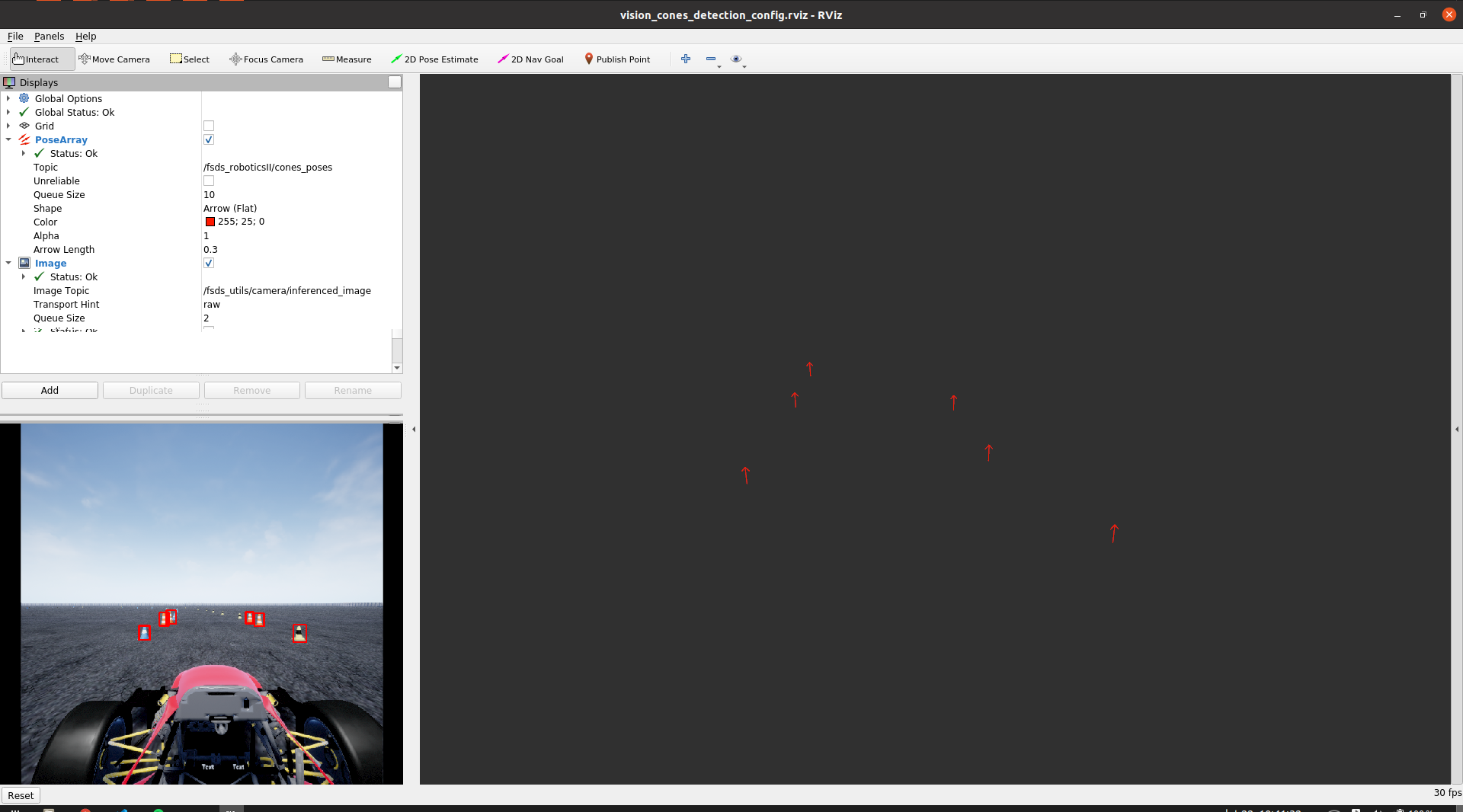
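For the box-drawing loop in `camera_detection_callback`, a minimal NumPy-only sketch is shown below. It assumes each entry of `boxes` starts with pixel coordinates `[x1, y1, x2, y2]` and that `im` is an HWC `uint8` array; the actual box layout in the lab code may differ. The helper name `draw_boxes` and its parameters are made up for this example. If OpenCV is available, `cv2.rectangle(im, (x1, y1), (x2, y2), color, thickness)` is a one-call alternative for the body of the loop.

```python
import numpy as np


def draw_boxes(im, boxes, color=(0, 255, 0), thickness=2):
    """Draw rectangle outlines for [x1, y1, x2, y2] boxes on an HWC image, in place."""
    h, w = im.shape[:2]
    t = thickness
    for box in boxes:
        x1, y1, x2, y2 = (int(round(float(v))) for v in box[:4])
        # Clamp the coordinates so boxes partly outside the image do not wrap around.
        x1, x2 = max(0, min(x1, w - 1)), max(0, min(x2, w - 1))
        y1, y2 = max(0, min(y1, h - 1)), max(0, min(y2, h - 1))
        im[y1:y1 + t, x1:x2 + 1] = color                   # top edge
        im[max(y2 - t + 1, 0):y2 + 1, x1:x2 + 1] = color   # bottom edge
        im[y1:y2 + 1, x1:x1 + t] = color                   # left edge
        im[y1:y2 + 1, max(x2 - t + 1, 0):x2 + 1] = color   # right edge
    return im


canvas = np.zeros((20, 20, 3), dtype=np.uint8)
draw_boxes(canvas, [[2, 3, 10, 12]], thickness=1)
```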

As a result, upload a screenshot from the rviz tool to the eKursy platform.